FaceFan: a fan that recognizes your face with OpenCV

Contents

What we made

A fan that detects your face with an iPhone camera and rotates toward you.

How it works

Materials

- Smartphone (we use an iPhone, but Android also works)

- obniz Board

- Servo motor

- Small USB Fan

- USB <-> Pin header

- Pin header

- Cable

- USB cable

- USB adapter

How to make

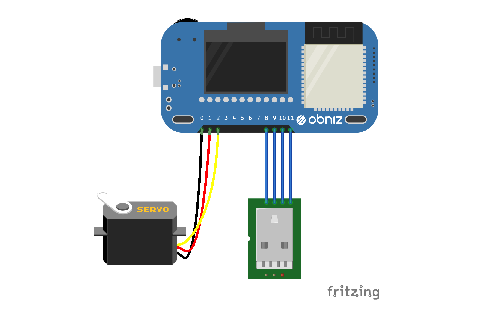

Hardware Connection

There are only three steps.

- Wire the servo motor to the obniz Board.

- Connect the small USB fan to the USB <-> pin header adapter, and wire the adapter to the obniz Board.

- Connect the obniz Board to a power supply.

Software

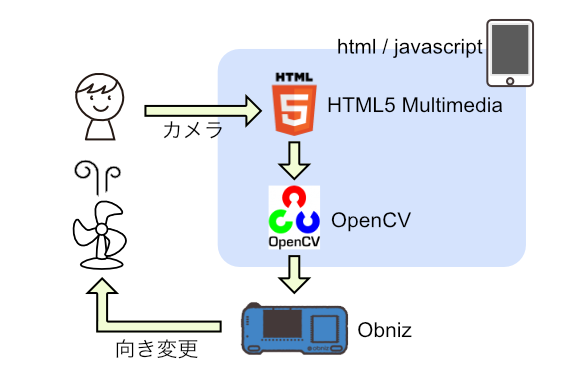

The software uses the HTML5 media API to access the iPhone camera, and detects a face with OpenCV.js.

- Get the camera data with the HTML5 media API.

- Detect faces in the camera data with OpenCV.

- Detect the x position of your face and rotate the servo motor.

1. Get the camera data with the HTML5 media API.

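When you call navigator.mediaDevices.getUserMedia(), the browser asks the user for permission to use the camera. Once permission is granted, the stream passed to the then() callback is set on the video tag, and the camera image is displayed on the HTML page.

It works only on pages served over HTTPS.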
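The HTTPS requirement above can be checked before asking for the camera, so that the page can show a clear message instead of failing silently. The following is a minimal sketch, not part of the original project: `checkCameraSupport` is a hypothetical helper name, and it takes a `window`-like object as a parameter so that it can also be exercised outside a browser. Note that browsers treat `localhost` as a secure context too, which is convenient during development.

```javascript
// Hypothetical helper (not from the original project): reports whether
// camera access is likely to work. `win` should look like the browser's
// `window` object.
function checkCameraSupport(win) {
  // Browsers expose getUserMedia() only in secure contexts (HTTPS or localhost).
  if (!win.isSecureContext) {
    return { ok: false, reason: 'Page is not served over HTTPS (or localhost).' };
  }
  const mediaDevices = win.navigator && win.navigator.mediaDevices;
  if (!mediaDevices || typeof mediaDevices.getUserMedia !== 'function') {
    return { ok: false, reason: 'navigator.mediaDevices.getUserMedia() is not available.' };
  }
  return { ok: true, reason: '' };
}

// In the browser, this could be called as:
//   const support = checkCameraSupport(window);
//   if (!support.ok) console.log(support.reason);
```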

<video id="videoInput" autoplay playsinline width="320" height="240"></video>

<button id="startAndStop">Start</button>

<script>

let videoInput = document.getElementById('videoInput');

let startAndStop = document.getElementById('startAndStop');

startAndStop.addEventListener('click', () => {

if (!streaming) {

navigator.mediaDevices = navigator.mediaDevices || ((navigator.mozGetUserMedia || navigator.webkitGetUserMedia) ? {

getUserMedia: function (c) {

return new Promise(function (y, n) {

(navigator.mozGetUserMedia ||

navigator.webkitGetUserMedia).call(navigator, c, y, n);

});

}

} : null);

if (!navigator.mediaDevices) {

console.log("getUserMedia() not supported.");

return;

}

const medias = {

audio: false,

video: {

facingMode: "user"

}

};

navigator.mediaDevices.getUserMedia(medias)

.then(function (stream) {

streaming = true;

var video = document.getElementById("videoInput");

// Attach the stream directly; current browsers no longer accept createObjectURL(stream).

video.srcObject = stream;

video.onloadedmetadata = function (e) {

video.play();

onVideoStarted();

};

})

.catch(function (err) {

console.error('mediaDevices.getUserMedia() error:' + (err.message || err));

});

} else {

utils.stopCamera();

onVideoStopped();

}

});

function onVideoStarted() {

startAndStop.innerText = 'Stop';

// ...

}

function onVideoStopped() {

startAndStop.innerText = 'Start';

// ...

}

</script>

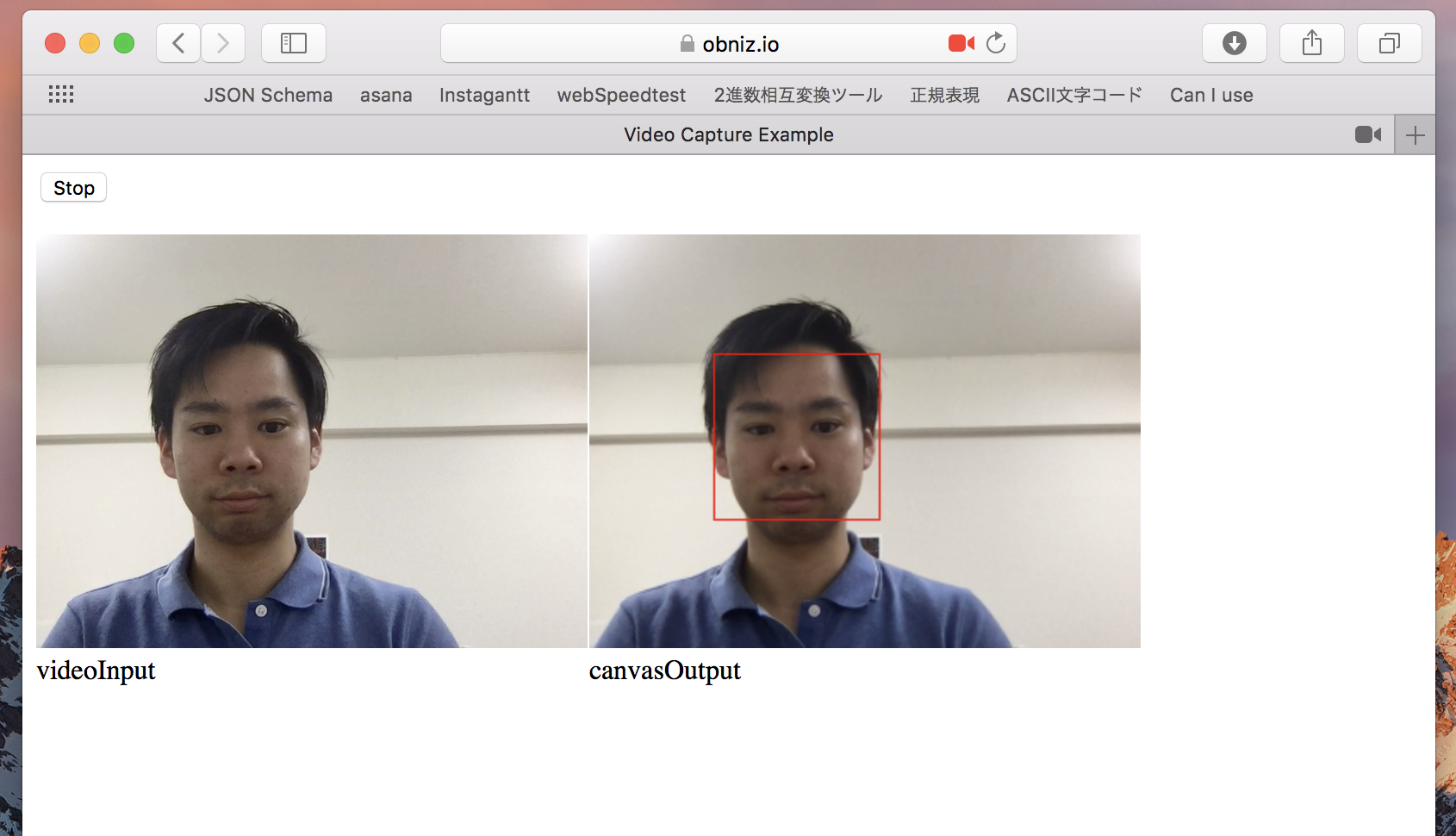
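The Stop branch above relies on `utils.stopCamera()` from the OpenCV sample's utils.js. If you are not loading that helper, the camera can be released by stopping the tracks of the stream attached to the video element. This is a rough sketch under that assumption; `stopStream` is a hypothetical name, not a function from OpenCV or the original code.

```javascript
// Hypothetical replacement for utils.stopCamera(): stops every track of the
// MediaStream attached to `video` and detaches it from the element.
// Returns true if a stream was attached, false otherwise.
function stopStream(video) {
  const stream = video.srcObject;
  if (stream) {
    // MediaStreamTrack.stop() releases the camera (the indicator light turns off).
    stream.getTracks().forEach((track) => track.stop());
  }
  video.srcObject = null;
  return Boolean(stream);
}

// In the browser, this could be called as:
//   stopStream(document.getElementById('videoInput'));
```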

2. Detect faces in the camera data with OpenCV.

We use the OpenCV sample code. haarcascade_frontalface_default.xml contains the pretrained face data, and we use it to detect faces.

<canvas id="canvasOutput" width="320" height="240" style="-webkit-font-smoothing:none"></canvas>

<script src="https://docs.opencv.org/3.4/opencv.js"></script>

<script src="https://webrtc.github.io/adapter/adapter-5.0.4.js" type="text/javascript"></script>

<script src="https://docs.opencv.org/3.4/utils.js" type="text/javascript"></script>

<script>

let streaming = false;

function onVideoStopped() {

streaming = false;

let canvasOutput = document.getElementById('canvasOutput');

canvasOutput.getContext('2d').clearRect(0, 0, canvasOutput.width, canvasOutput.height);

startAndStop.innerText = 'Start';

}

let utils = new Utils('errorMessage');

let faceCascadeFile = 'haarcascade_frontalface_default.xml';

utils.createFileFromUrl(faceCascadeFile, 'https://raw.githubusercontent.com/opencv/opencv/master/data/haarcascades/haarcascade_frontalface_default.xml', () => {

startAndStop.removeAttribute('disabled');

});

async function start() {

let video = document.getElementById('videoInput');

let src = new cv.Mat(video.height, video.width, cv.CV_8UC4);

let dst = new cv.Mat(video.height, video.width, cv.CV_8UC4);

let gray = new cv.Mat();

let cap = new cv.VideoCapture(video);

let faces = new cv.RectVector();

let classifier = new cv.CascadeClassifier();

let result = classifier.load("haarcascade_frontalface_default.xml");

const FPS = 30;

function processVideo() {

try {

if (!streaming) {

// clean and stop.

src.delete();

dst.delete();

gray.delete();

faces.delete();

classifier.delete();

return;

}

let begin = Date.now();

// start processing.

cap.read(src);

src.copyTo(dst);

cv.cvtColor(dst, gray, cv.COLOR_RGBA2GRAY, 0);

// detect faces.

classifier.detectMultiScale(gray, faces, 1.1, 3, 0);

// draw faces.

for (let i = 0; i < faces.size(); ++i) {

let face = faces.get(i);

let point1 = new cv.Point(face.x, face.y);

let point2 = new cv.Point(face.x + face.width, face.y + face.height);

cv.rectangle(dst, point1, point2, [255, 0, 0, 255]);

}

cv.imshow('canvasOutput', dst);

// schedule the next one.

let delay = 1000 / FPS - (Date.now() - begin);

setTimeout(processVideo, delay);

} catch (err) {

console.error(err);

}

};

// schedule the first one.

setTimeout(processVideo, 0);

}

</script>
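`detectMultiScale()` can return several rectangles in one frame, but the fan can only point at one person. One possible way to choose a target, sketched below, is to take the largest rectangle (presumably the closest face) and use its horizontal center. This selection rule is an assumption for illustration, not something the original project specifies; `pickLargestFace` and `faceCenterX` are hypothetical helper names. They work on plain objects with the same `x`, `y`, `width` and `height` fields that `faces.get(i)` returns.

```javascript
// Hypothetical helper: returns the rectangle with the largest area,
// or null when the list is empty.
function pickLargestFace(rects) {
  let best = null;
  for (const rect of rects) {
    if (best === null || rect.width * rect.height > best.width * best.height) {
      best = rect;
    }
  }
  return best;
}

// Hypothetical helper: horizontal center of a rectangle, in pixels.
function faceCenterX(rect) {
  return rect.x + rect.width / 2;
}
```

Inside `processVideo()`, the rectangles could be collected into an array in the existing `for` loop (for example with `rects.push(faces.get(i))`) and passed to `pickLargestFace(rects)` after the loop.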

3. Detect the x position of your face and rotate the servo motor.

The code is simple. All you need to specify is which obniz pins the servo motor and the USB adapter are wired to, and how far to rotate the servo motor.

new Obniz("OBNIZ_ID_HERE"); is the code that connects to your obniz Board.

let obniz = new Obniz("OBNIZ_ID_HERE");

let servo;

obniz.onconnect = async () => {

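obniz.display.print("ready");

// Power the USB fan through the USB <-> pin header adapter.

var usb = obniz.wired("USB" , {gnd:11, vcc:8} );

usb.on();

// Servo motor: signal on pin 0, power on pin 1, ground on pin 2.

servo = obniz.wired("ServoMotor", {signal:0,vcc:1, gnd:2});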

}

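// Place this inside processVideo(), after detectMultiScale().

// faces.size() > 0 means a face was detected; servo is set once obniz is connected.

if (servo && faces.size() > 0) {

// x position of the first detected face in the 320-pixel-wide frame (assumed here to be its left edge).

let xPos = faces.get(0).x;

// Map 0..320 pixels to 0..180 degrees.

servo.angle(xPos * 180 / 320);

}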
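The mapping `xPos * 180 / 320` above converts a pixel position in the 320-pixel-wide frame into a servo angle between 0 and 180 degrees. A slightly more defensive version is sketched below. It clamps the result to the servo's range, can mirror the direction (a front camera may see left and right swapped relative to the fan, depending on how it is mounted), and skips tiny movements so that the servo does not jitter on every frame. `xToAngle`, `shouldMove`, the `mirror` flag and the 3-degree threshold are all assumptions made for illustration, not part of the original project or the obniz API.

```javascript
// Hypothetical helper: map a face x position (pixels) to a servo angle
// (degrees), clamped to the range 0..180.
function xToAngle(xPos, frameWidth = 320, mirror = false) {
  const ratio = Math.min(Math.max(xPos / frameWidth, 0), 1);
  const angle = ratio * 180;
  // Flip the direction when the camera and the fan see opposite sides.
  return mirror ? 180 - angle : angle;
}

// Hypothetical deadband: move only on the first update or when the angle
// changed by at least `threshold` degrees since the last move.
function shouldMove(lastAngle, nextAngle, threshold = 3) {
  return lastAngle === null || Math.abs(nextAngle - lastAngle) >= threshold;
}
```

In the detection loop this could be used as `const angle = xToAngle(xPos); if (shouldMove(lastAngle, angle)) { servo.angle(angle); lastAngle = angle; }`, with `lastAngle` initialized to `null` outside the loop.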